AI Detection versus Cryptographic Provenance

During SXSW 2023, Greg Brockman discussed how large language models (LLMs) challenge traditional notions of truth, authenticity, and creative ownership. The rise of AI-generated text, images, and even deepfake videos has made it increasingly difficult to distinguish what is real from what is artificially generated.

News organizations, schools, and user-generated content platforms are all clamoring for ways to detect artificially generated content. So how do we prove what's real anymore?

This demand has led to the emergence of numerous startups and tools that aim to identify AI-generated text, images, audio, and video. As of March 2025, examples include GPTZero, Copyleaks, Decopy AI Detector, and Scribbr AI Detector, among others.

Some of these solutions claim 99% accuracy (until they quietly roll that number back after real-world tests). Others take the security theater approach, offering expensive enterprise solutions that amount to a probabilistic guess wrapped in a glossy UI.

AI Detection is a Waste of Time because it Does Not Scale

The problem is that AI detection is an arms race that cannot be won. The moment detectors improve, generative AI adapts to evade them. Both are trained using the same fundamental techniques, ensuring that detection will always be reactive rather than proactive. Since AI detectors rely on past data to recognize patterns, they can only flag content that fits known characteristics of AI generation. Meanwhile, AI models can continuously evolve to eliminate those characteristics, rendering previous detection methods ineffective.

This mirrors the core dynamic of Generative Adversarial Networks (GANs), in which a generator and a discriminator are trained against each other: every improvement in detection directly drives the refinement of generation techniques. Each advance in AI detection hands AI developers a new obstacle to engineer around. Previously detectable traits, such as unnatural textures or inconsistent coherence in text, disappear in newer model iterations. Detection benchmarks can become part of the training data for next-generation models, making forensic methods quickly obsolete.

Research in adversarial machine learning has demonstrated how easily deep learning models can be fooled by minor modifications to their input. Small, imperceptible pixel changes can cause classifiers to misidentify an image, and a well-placed sticker on a Stop sign can trick a vision model into reading it as a Speed Limit sign. The same principle applies to AI-generated content: once detection models are publicly available, they serve as a benchmark for evasion.
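To make the "small change, big effect" point concrete, here is a toy sketch: a hypothetical linear detector over 10,000 input features, attacked with the fast gradient sign method (FGSM). The weights and the input are random stand-ins, not a real detector. The point is that a change of at most 0.01 per feature adds up across many features and flips the decision.

```python
import random


def score(w, x, b):
    """Linear 'detector' score: positive means 'flag as AI-generated'."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def fgsm_linear(w, x, eps):
    """One fast-gradient-sign step against a linear model.

    The gradient of w.x + b with respect to x is simply w, so moving every
    feature by eps against sign(w_i) lowers the score as much as possible
    while changing no single feature by more than eps.
    """
    def sign(v):
        return (v > 0) - (v < 0)

    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]


rng = random.Random(0)
n = 10_000                                       # number of input features ("pixels")
w = [rng.uniform(-1, 1) for _ in range(n)]       # hypothetical detector weights
x = [rng.uniform(0, 1) for _ in range(n)]        # hypothetical input image
b = 1.0 - sum(wi * xi for wi, xi in zip(w, x))   # bias chosen so x scores about +1

x_adv = fgsm_linear(w, x, eps=0.01)
print(score(w, x, b))       # about +1: flagged
print(score(w, x_adv, b))   # 1 - 0.01 * sum(|w_i|), far below zero: no longer flagged
print(max(abs(a - c) for a, c in zip(x, x_adv)))  # about 0.01: tiny per-feature change
```

Real detectors are nonlinear, but the same high-dimensional accumulation is what makes imperceptible perturbations effective against them.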

For AI detection to stay ahead, it would need to anticipate and classify future AI-generation techniques before they exist, which is computationally infeasible. The space of possible AI-generated outputs is too large, and there is no fixed "fingerprint" of AI content that detectors can reliably target. Any pattern a detector identifies can be removed in the next model iteration. More aggressive detectors risk misclassifying human-created content, while more conservative ones become ineffective against rapidly evolving AI. This tradeoff between false positives and false negatives makes AI detection an unreliable long-term solution.

Ultimately, AI detectors are locked in a perpetual defensive position, always reacting to new developments rather than preempting them. Every detection breakthrough feeds directly into the next generation of AI, strengthening it. The fundamental asymmetry between AI detection and generation ensures that detection will always be one step behind.

Relying on AI Detection Creates a Problem of Trust

Even if AI detection were effective, it raises a deeper issue: trust. Who controls these detection systems? Who verifies their accuracy? AI detection models function as opaque black boxes, making unverifiable claims about content authenticity. They just provide a probabilistic output that users are expected to trust. If an AI model flags a piece of content as artificially generated, how does someone challenge that claim? What recourse is there for those falsely accused of using AI-generated content? Without transparency or an independent verification mechanism, AI detection creates a new layer of authority that operates without accountability.

This problem extends beyond misclassification. If platforms rely on AI detection to filter or moderate content, it places significant power in the hands of whoever controls the detection models. AI detection can be abused, whether intentionally or as a side effect of systemic bias. If an AI classifier is treated as an arbiter of truth, it can be used to flag inconvenient content, silence dissent, or falsely discredit legitimate material. We've already seen real images being wrongfully flagged as AI-generated, raising concerns about false positives in high-stakes scenarios. This risk grows when platforms and governments rely on AI detection at scale, because challenging an incorrect classification becomes nearly impossible.

This centralization of authority over what's real and what's not introduces a new failure mode: what happens when the detection system itself is wrong? If detection models are treated as infallible, their mistakes become indistinguishable from deliberate manipulation. A flawed detection system doesn't just misclassify content; it creates an environment where authenticity is dictated rather than demonstrated. If a system cannot be challenged or independently verified, then it cannot be trusted as a solution to the problem it claims to solve.

Cryptographic Provenance is the Only Solution

Cryptographic provenance takes a fundamentally different approach from AI detection: it does not attempt to guess whether something is real or fake but instead provides verifiable proof of origin. Unlike probabilistic classifiers, cryptographic provenance allows content to be traced back to its source in a way that is independently verifiable and tamper-evident.

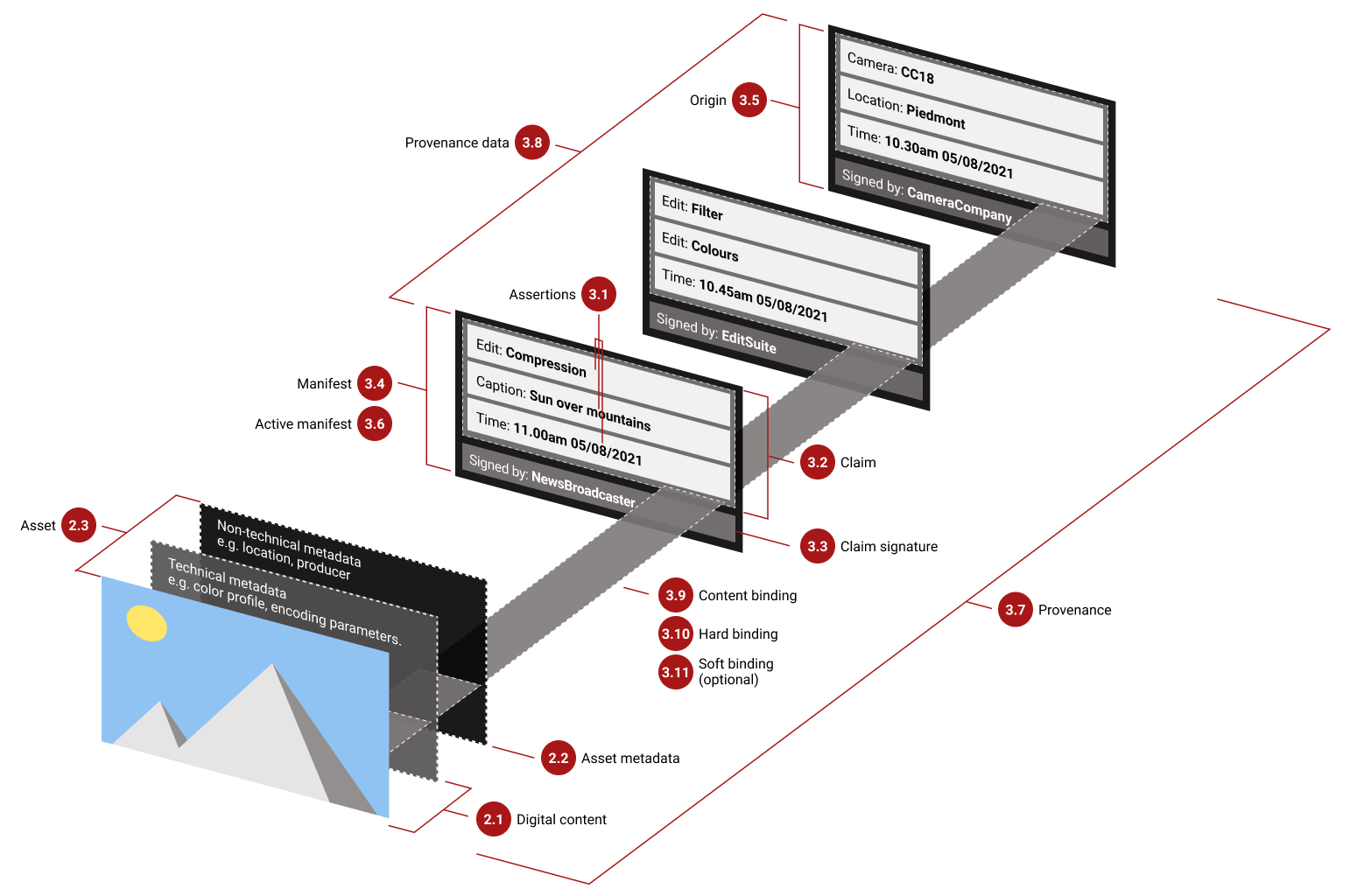

Cryptographic provenance is based on cryptographic hashing and digital signatures. When a piece of content such as an image, video, or document is created, a cryptographic hash of it is computed. This hash acts as a unique fingerprint of the content and changes completely if even a single pixel or letter is modified. The hash is then signed with the private key of a trusted entity, such as the camera, software, or organization capturing the content. The signature proves that this entity attested to exactly this content; when the signed data also includes a timestamp, it records when that attestation was made and shows that the content has not been altered since.
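The fingerprinting step can be seen with Python's standard `hashlib`. SHA-256 is used here as a common example; the hash function a particular provenance system uses may differ. Changing one character of the input produces a digest that shares no visible structure with the original.

```python
import hashlib

original = b"Flood waters reached the bridge at 9:14 a.m."
edited = b"Flood waters reached the bridge at 9:15 a.m."  # one character changed

h_original = hashlib.sha256(original).hexdigest()
h_edited = hashlib.sha256(edited).hexdigest()

# Count how many of the 256 output bits differ between the two fingerprints.
differing_bits = bin(int(h_original, 16) ^ int(h_edited, 16)).count("1")

print(h_original)
print(h_edited)
print(differing_bits, "of 256 bits differ")  # typically close to half
```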

Because it is computationally infeasible to find a different input that produces the same hash, or to forge a signature without the signer's private key, these primitives provide strong guarantees of integrity. Unlike ordinary metadata, which can be freely edited, the hash is derived from the content itself, so any edit to the content invalidates the signature. Anyone holding the signer's public key can verify the integrity of a file without asking the signer or a central service. If a file has been modified, its hash will no longer match the signed value, making tampering immediately detectable.
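A minimal sketch of signing and verifying a content hash follows. So that it runs with only the standard library, it swaps in Lamport one-time signatures, a hash-based signature scheme; production systems use standard schemes such as ECDSA or Ed25519 through a vetted library, and a Lamport key must never sign more than one message. The function names are illustrative, not any particular product's API.

```python
import hashlib
import secrets


def H(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def digest_bits(data: bytes):
    """The 256 bits of SHA-256(data), most significant bit first."""
    return [(byte >> i) & 1 for byte in H(data) for i in range(7, -1, -1)]


def keygen():
    # Private key: one pair of random 32-byte secrets per bit of the digest.
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    # Public key: the hash of every secret. Safe to publish.
    pk = [(H(a), H(b)) for a, b in sk]
    return sk, pk


def sign(sk, content: bytes):
    # Reveal one secret from each pair, chosen by the matching bit of the content hash.
    return [pair[bit] for pair, bit in zip(sk, digest_bits(content))]


def verify(pk, content: bytes, signature) -> bool:
    # Each revealed secret must hash to the public value selected by the same bit.
    bits = digest_bits(content)
    return len(signature) == 256 and all(
        H(s) == pair[bit] for s, pair, bit in zip(signature, pk, bits)
    )


sk, pk = keygen()
photo = b"raw image bytes"
signature = sign(sk, photo)
print(verify(pk, photo, signature))            # True
print(verify(pk, photo + b"\x00", signature))  # False: one extra byte breaks it
```

Verification needs only the public key and the content, which is what lets third parties check integrity without contacting the signer.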

One of the most widely adopted provenance solutions is the standard from the Coalition for Content Provenance and Authenticity (C2PA), an initiative backed by Adobe, Microsoft, Intel, and Google. C2PA embeds cryptographically signed metadata, called a manifest, into media files at the point of creation or editing, recording details such as the source, timestamp, and any modifications made to the content. Verification works by checking the signed manifest against the content's hash and checking the signer's certificate against a list of trusted issuers.

While C2PA represents a step toward verifiable media authenticity, it has limitations. The most immediate issue is that metadata can be stripped. A file signed with C2PA provenance can be downloaded, altered, and re-uploaded without its original metadata, making verification impossible. Many platforms already strip metadata by default for privacy reasons, and unless every major platform enforces C2PA metadata retention, unverified copies will continue to circulate.
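The stripping problem can be sketched with a toy container that appends a manifest to the content. The separator, field names, and helper functions are hypothetical and far simpler than real C2PA manifests; in particular, the signature over the manifest is omitted. The key behavior is that a stripped copy reports "no provenance", which a verifier cannot tell apart from a file that was never signed.

```python
import hashlib
import json

MARKER = b"\n--MANIFEST--\n"  # hypothetical separator, not the real C2PA container format


def embed_manifest(content: bytes, source: str) -> bytes:
    manifest = {"source": source, "sha256": hashlib.sha256(content).hexdigest()}
    # A real manifest would also carry a signature over these fields.
    return content + MARKER + json.dumps(manifest, sort_keys=True).encode()


def strip_metadata(file_bytes: bytes) -> bytes:
    # What many upload pipelines effectively do: keep the content, drop the rest.
    return file_bytes.split(MARKER, 1)[0]


def check(file_bytes: bytes) -> str:
    content, sep, manifest_bytes = file_bytes.partition(MARKER)
    if not sep:
        return "no provenance"  # indistinguishable from a file that was never signed
    manifest = json.loads(manifest_bytes)
    if hashlib.sha256(content).hexdigest() != manifest["sha256"]:
        return "tampered"
    return "verified: " + manifest["source"]


signed = embed_manifest(b"pixels", "Example News camera")
print(check(signed))                               # verified: Example News camera
print(check(strip_metadata(signed)))               # no provenance
print(check(b"PIXELS" + signed[len(b"pixels"):]))  # tampered
```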

Another limitation is that C2PA does not verify whether the content was staged or manipulated before it was signed. If an AI-generated deepfake is signed at the moment of creation, its signature is just as valid as one on real footage. Provenance alone does not determine truth; it only tracks the history of a file, not whether the content itself is genuine.

Beyond technical concerns, cryptographic provenance runs into a broader issue: centralization. For provenance systems like C2PA to be widely adopted, they rely on a small number of trusted organizations to issue and verify content signatures. This creates a single point of control over what is considered "authentic." If a handful of companies act as gatekeepers for verification, they have the power to dictate which content is considered real and which is not.

This introduces the same risks as AI detection: censorship, misclassification, and manipulation. If platforms or governments require provenance verification for content distribution, content without cryptographic signatures could be devalued or even suppressed. A centralized verification system does not eliminate misinformation; it simply shifts control over authenticity from AI classifiers to a small group of organizations.

Cryptographic provenance is an important step toward solving the problem of digital authenticity, but its implementation matters. If verification remains centralized, it risks becoming another authority-based system where trust is dictated rather than independently verifiable. The challenge is not just proving where content comes from, but ensuring that no single entity has the power to control what is considered real.

Introducing a Decentralized Trust Network

Centralized provenance systems, like C2PA, introduce a single point of control over what is considered "authentic," making them vulnerable to manipulation and censorship. A Decentralized Trust Network (DTN) solves this problem by distributing trust across independent participants rather than concentrating authority in a handful of entities.

A DTN does not rely on metadata embedded within content, which can be easily stripped or altered. Instead, it allows claims about a piece of content's authenticity to be recorded on a distributed ledger, so that provenance remains verifiable even if a file's embedded metadata is stripped or the file is reuploaded. More importantly, DTNs recognize that truth is not absolute. It is socially constructed. Authenticity should not be dictated by a single gatekeeper but established through multiple independent claims that can be corroborated, challenged, and verified.
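As a contrast to embedded metadata, the sketch below keeps claims outside the file, indexed by a fingerprint of the content. `ClaimRegistry` is a hypothetical in-memory stand-in for claims held by independent nodes, not Polykey's API. It also shows the limit of exact hashing: claims are found as long as the fingerprint is computed over the same content bytes, but re-encoded or edited bytes produce a new fingerprint.

```python
import hashlib
from collections import defaultdict


def fingerprint(content: bytes) -> str:
    return hashlib.sha256(content).hexdigest()


class ClaimRegistry:
    """Hypothetical in-memory stand-in for claims published by independent nodes."""

    def __init__(self):
        self._claims = defaultdict(list)

    def publish(self, node_id: str, content: bytes, statement: str) -> None:
        self._claims[fingerprint(content)].append((node_id, statement))

    def lookup(self, content: bytes) -> list:
        # .get avoids creating empty entries for content nobody has claimed.
        return list(self._claims.get(fingerprint(content), []))


registry = ClaimRegistry()
footage = b"video payload"
registry.publish("node-A", footage, "recorded at the town hall on 3 March")
registry.publish("node-B", footage, "matches our own camera angle")

print(registry.lookup(footage))                # both claims, no embedded metadata needed
print(registry.lookup(b"re-encoded payload"))  # []: different bytes, different fingerprint
```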

Polykey's DTN is designed to provide cryptographic verification of content while avoiding the pitfalls of centralized control. It achieves this through cryptographic fingerprints, independent Polykey nodes that store and verify trust data, and Gestalts, trust collectives that allow for flexible, socially or institutionally scoped verification. Unlike traditional blockchains, Polykey's DTN does not require slow or energy-intensive consensus mechanisms. Instead of securing financial transactions, it is optimized for verifying authenticity efficiently through cryptographic signatures and peer validation. This makes it scalable while avoiding unnecessary computational overhead.

A DTN ensures that content's existence and integrity are verifiable, and it also establishes who created the content and whether it has remained intact over time. This is achieved through Signature Chains (SigChains), which form the backbone of Polykey's DTN. Each Polykey node maintains its own SigChain, in which every claim is automatically signed with the node's own key, creating an append-only, tamper-evident record of attestations that can be independently verified.
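The hash-linking idea behind an append-only chain of claims can be sketched as follows. This is a hypothetical structure, not Polykey's actual SigChain format or API, and it omits the per-entry signature a node would add with its private key. Each entry records the hash of the one before it, so rewriting any earlier claim breaks every later link.

```python
import hashlib
import json
from dataclasses import dataclass, field


def digest(obj) -> str:
    # Canonical JSON so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()


@dataclass
class SigChain:
    node_id: str
    entries: list = field(default_factory=list)

    def append(self, claim: dict) -> dict:
        prev = digest(self.entries[-1]) if self.entries else None
        entry = {"seq": len(self.entries), "issuer": self.node_id, "prev": prev, "claim": claim}
        # A real node would sign `entry` with its private key at this point.
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev = None
        for seq, entry in enumerate(self.entries):
            if entry["seq"] != seq or entry["prev"] != prev or entry["issuer"] != self.node_id:
                return False
            prev = digest(entry)
        return True


chain = SigChain("node-A")
chain.append({"type": "content", "sha256": "ab" * 32})
chain.append({"type": "identity", "provider": "x", "handle": "alice"})
chain.append({"type": "content", "sha256": "cd" * 32})
print(chain.verify())  # True

chain.entries[0]["claim"]["sha256"] = "ef" * 32  # rewrite history
print(chain.verify())  # False: entry 1 no longer points at entry 0
```

Hash links alone only protect entries that have a successor; the newest entry, and the chain as a whole, rely on the node's signature, which is why every claim is signed.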

Because all nodes in the DTN are part of a Gestalt, SigChains inherit the real-world identity associations of the Gestalt itself. Gestalts are cryptographically linked to external digital identities such as Instagram, X, Facebook, Nostr, or other third-party verification sources. This means that when a Polykey node issues a claim, it is not just a detached cryptographic assertion; it is rooted in a broader web of trust, in which multiple independent entities corroborate authenticity. This structure enables trust delegation without centralizing control, ensuring that verification remains distributed while allowing for real-world accountability.
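One way to picture Gestalt formation is as connected components of a graph whose vertices are nodes and external identities. In this hypothetical sketch, a link counts only when it is claimed from both ends (for example, a node claims an X handle and the handle publishes a matching proof). The vertex names and the mutual-claim rule are illustrative assumptions, not Polykey's implementation.

```python
from collections import defaultdict, deque


def build_gestalts(claims):
    """Group vertices into gestalts from directed (claimant, subject) link claims.

    A link becomes an edge only if both directions were claimed.
    """
    claimed = set(claims)
    graph = defaultdict(set)
    for a, b in claimed:
        if (b, a) in claimed:
            graph[a].add(b)
            graph[b].add(a)

    seen, gestalts = set(), []
    for start in graph:
        if start in seen:
            continue
        seen.add(start)
        component, queue = set(), deque([start])
        while queue:  # breadth-first search over mutual links
            vertex = queue.popleft()
            component.add(vertex)
            for neighbour in graph[vertex]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    queue.append(neighbour)
        gestalts.append(component)
    return gestalts


claims = [
    ("node:A", "node:B"), ("node:B", "node:A"),            # two devices vouch for each other
    ("node:A", "x:alice"), ("x:alice", "node:A"),          # node A linked to an X account
    ("node:B", "nostr:alice"), ("nostr:alice", "node:B"),  # node B linked to a Nostr key
    ("node:C", "x:alice"),                                 # one-sided: C is not admitted
]
print(build_gestalts(claims))
```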

Polykey's DTN recognizes that truth is often socially determined. The current media landscape prioritizes speed over accuracy. News outlets, influencers, and platforms rush to publish first, even if the information is later retracted. By the time corrections are issued, the impact has already been made. A decentralized trust network shifts this dynamic by incentivizing accuracy over speed. SigChains allow multiple independent authorities to make corroborating claims on reality, supported by verifiable content. This creates a reputation-based system where those who stake their trust on false claims suffer credibility losses, reducing the incentive to publish misleading or inaccurate information. Unlike traditional provenance models, which rely on static metadata, Polykey's DTN enables a more dynamic and robust system of truth verification.
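The reputation dynamic could be modeled in many ways; the sketch below is one hypothetical scoring rule, not a mechanism Polykey specifies. Support for a claim counts each Gestalt once, so a single actor running many nodes cannot manufacture corroboration, and backers of a claim later shown to be false lose credibility. The class names and the `reward` and `penalty` values are arbitrary illustrations.

```python
from dataclasses import dataclass, field


@dataclass
class Authority:
    name: str
    gestalt: str             # the trust collective this authority belongs to
    credibility: float = 1.0


@dataclass
class Claim:
    statement: str
    backers: list = field(default_factory=list)

    def support(self) -> float:
        # Count only the most credible backer from each gestalt.
        best = {}
        for a in self.backers:
            best[a.gestalt] = max(best.get(a.gestalt, 0.0), a.credibility)
        return sum(best.values())

    def resolve(self, was_true: bool, reward: float = 0.1, penalty: float = 0.5) -> None:
        # Backers gain a little when a claim holds up and lose a lot when it does not.
        unique = {id(b): b for b in self.backers}
        for a in unique.values():
            if was_true:
                a.credibility += reward
            else:
                a.credibility *= 1 - penalty


outlet = Authority("Outlet", gestalt="g-outlet")
sock1 = Authority("Sock puppet 1", gestalt="g-puppets")
sock2 = Authority("Sock puppet 2", gestalt="g-puppets")
claim = Claim("The bridge collapsed at 9:14", backers=[outlet, sock1, sock2])
print(claim.support())      # 2.0: the two puppets share one gestalt
claim.resolve(was_true=False)
print(outlet.credibility)   # 0.5
```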

The key lesson here is that it doesn't actually matter whether content is AI-generated or not. What matters is the underlying truth claim that the content represents. AI-generated media is not inherently false, just as human-created media is not inherently true. A deepfake can be labeled as such while still being a valid satirical expression, and an unedited video can still be used out of context to mislead. The problem is not how content is created but what it claims to represent. This is why focusing on AI detection means solving the wrong problem. Provenance is not about filtering AI out of the conversation but about ensuring that claims about reality can be verified in a decentralized and accountable way.

Polykey's DTN is more than just a cryptographic provenance system. It represents the next phase of decentralized authority management. While Polykey began as a system for managing digital secrets and access control, the same principles of distributed trust apply to content authenticity, identity verification, and broader claims about reality. In a world where AI-generated content is becoming indistinguishable from reality, we need a trust system that is verifiable, resilient, and independent of centralized control. The future of digital trust is not about fighting AI, but about creating systems that allow us to verify the truth, no matter how it is expressed.